Apple (NASDAQ:AAPL) revealed its vision for generative artificial intelligence at its Worldwide Developers Conference, which kicked off June 10. Microsoft (MSFT) had already done so at its Build developer conference on May 21, and in the unveiling of the new Copilot+ PC. Microsoft’s AI emphasis is still on the cloud, where it has made enormous investments and can leverage the work of its partner, OpenAI. As always, Apple is going its own way in AI, intending to offer its platforms infused with useful intelligence that’s mostly on-device. Both companies see AI as the next revolution in the personal computing user interface.

How the AI competition became the new user interface battle

I don’t think it’s an exaggeration to say that ChatGPT, which came out in late 2022, was like an H-bomb going off in the middle of the personal computing world. Here, finally, was an AI that one could chat with, via text inputs, that seemed genuinely intelligent and knowledgeable.

The compute resources required to train a generative pre-trained transformer (GPT) AI are enormous and getting bigger all the time. Nvidia (NVDA), a personal favorite of mine, was perfectly positioned to provide the necessary compute hardware. At least for now, Nvidia remains the company that has financially benefited most from GPTs.

For the major platform companies, Apple, Microsoft, and others, 2023 became a year of experimentation, trying to see what GPTs were good for. Microsoft populated its vast software offerings with GPT-based Copilots as “previews.”

Apple seemed to hang back, adding modest upgrades to the on-device AI that it has offered for years, ever since Face ID was introduced in iPhone X in 2017. Since 2017, Apple has incorporated specialized AI accelerators it calls Neural Engines in every iPhone processor and in all the M-series Apple Silicon Macs.

But Apple’s on-device AI couldn’t run anything like ChatGPT, which lives in the cloud, and Apple’s AI expertise has been largely ignored or minimized by media pundits. For instance, Bloomberg’s Mark Gurman seriously underestimated what Apple would be able to show in AI at WWDC this year.

Over the past year, both Microsoft and Apple apparently came to the conclusion that GPTs represented the next big advance in the computer user interface. Properly trained and implemented, the GPT could become the computer. As Microsoft CEO Satya Nadella said during the Build keynote, instead of the user trying to understand the computer, the computer would now understand the user.

Well, I think “understand” is a bit of a stretch. I’m not convinced that GPTs have anything like human understanding, even if they give that impression. And even after training on vast amounts of data, such as most of what’s available on the Internet, GPTs can still make mistakes that humans would not. GPTs can confabulate, essentially making up incorrect answers to queries.

Despite this limitation, the appeal of the GPT as a computer interface was irresistible for both companies, and both have committed to endowing their operating systems with GPT-based user interfaces.

Ideally, the user would interact with the GPT to control the computer, rather than typing commands or executing computer code in apps. Inputs would be in the form of natural language, either text or speech, and the GPT would understand context, including what was being displayed on the computer screen.

This capability is seen as spanning all levels of personal computing, from the smartphone to the workstation. So, if a user with a smartphone wanted to call an Uber, the user would just ask the GPT to do that, rather than opening the Uber app and providing the necessary instructions. The GPT would know the user’s location from the smartphone as well as the user’s payment data, etc.

For a GPT to be really useful as a computer interface, the GPT has to be able to access all sorts of data about the user, including usage history and personal information. This brings up all manner of privacy concerns. This is a key area of differentiation between the Apple and Microsoft approaches.

But both companies have realized that the only way to ensure maximum data privacy is to keep the data on the device. Thus, both companies use GPTs that can run exclusively on the device. Another key point of differentiation is what happens when on-device AI isn’t enough.

So the stage is now set for a battle over this next computer user interface, akin to the battle over the graphical user interface in the 80s and 90s. Let the battle be joined.

What Apple is facing: Microsoft’s Copilot+ PC

By virtue of its association with, and part ownership of, OpenAI, Microsoft had an inside track on GPT technology. It didn’t have to hire OpenAI to provide a GPT. Microsoft could bring the technology in house and adapt it to its own needs.

And it likely started doing this several years ago, following its initial investment in OpenAI in 2019. As Microsoft became more convinced of the value of GPTs as a computer user interface, it began introducing what it called “Copilots” in its various software offerings, as I discussed in February 2023.

At the same time, Microsoft invested heavily in its Azure cloud infrastructure to support hosting the GPTs in the cloud. This helped fuel Nvidia’s Data Center segment growth, as I discussed in May 2023. Microsoft became the exclusive cloud provider to OpenAI.

The physical computing requirements to host ChatGPT and similar GPTs played naturally into Microsoft’s strength in commercial cloud services. Even as Windows seemed to stall as a growth engine, cloud hosting of AI services drove Microsoft’s growth through 2023.

At the time, it appeared to me that Nadella, who always seemed much more interested in cloud computing, was losing interest in Windows. I was wrong. Nadella had a plan to rejuvenate Windows, and that became the Copilot+ PC.

Realizing that on-device AI required hardware acceleration on the PC processor, Microsoft mandated that processors for the new Copilot+ PC have Neural Processing Units (NPUs) with a specific amount of AI compute power.

Qualcomm (QCOM) was the first out of the gate with Snapdragon X Elite, launched at the Snapdragon Summit in October 2023. That Microsoft chose an ARM processor as the basis of its new Surface Pro tablet and Surface Laptop speaks volumes about the state of x86 processor competitiveness with Apple Silicon.

It was a risky move. The ability (or lack thereof) to run legacy Windows software has always been the Achilles heel of Windows on ARM devices. Nevertheless, Microsoft plunged ahead and unveiled the new Snapdragon-powered Surface devices (the first Copilot+ PCs) at a subdued keynote hosted by Nadella on May 20, the eve of the Build conference.

It was claimed that the new hardware platform was the only way that Microsoft could offer the various unique features of the Copilot+ PC. The Copilot+ PC, as currently configured, is simply a laptop powered by a processor with an NPU.

Microsoft’s claims for the Copilot+ PC. Source: Microsoft via YouTube.

Almost by definition, the Copilot+ PC can’t be the most powerful PC in the world, and its supposedly exclusive features could be implemented on almost any Windows desktop or laptop computer with the appropriate GPU. But this is how Microsoft has chosen to stimulate sales in a product category (ultralight laptops) increasingly overshadowed by Apple’s laptops and tablets powered by Apple Silicon.

Thus, Microsoft has endowed Copilot+ PCs with a number of AI features that it is withholding from the larger community of Windows PCs, even those running Windows 11. This is particularly annoying since Microsoft made such a big deal of imposing additional hardware requirements in order to qualify for Windows 11.

So what are the exclusive features of Copilot+? They’re actually pretty interesting and useful. Perhaps the most useful, and the one I truly wish I had on my current Windows 11 workstation, is Recall.

Recall is a feature that is implemented exclusively on the local device using the NPU. Recall remembers your activities on the device, including file operations, content creation and web browsing. To find something you’ve done in the past, you can simply query Microsoft Copilot, and it will look for what you’re trying to find.

Application of generative AI to visual arts has become very popular. With the right AI tool, one can create images based on a simple verbal description or edit them for things like background removal. Copilot+ has a tool for that, called Cocreate.

Cocreate allows you to add various effects to drawings as you generate them, including shadows and highlights. It also allows converting a drawing into a particular style, such as that of Van Gogh.

While Cocreate is for still images, there’s also a related tool for visual processing of video, called Studio Effects. This allows subtracting or blurring backgrounds and adding other special effects.

Copilot+ PCs also offer any Microsoft Copilot features that are cloud-based. These include text creation and image creation tools, and a future implementation of the very powerful GPT-4o, which provides natural speech recognition and a natural-sounding synthesized voice.

Here’s the rub. It’s not clear when the OS will resort to cloud AI and when it will be exclusively on the device. It seems the only thing guaranteed to be on-device is Recall. In general, Microsoft Copilot appears to be mostly cloud-based, which means that a lot of user data gets sent to the cloud.

How that data is handled and protected remains somewhat mysterious. Microsoft wants to make the user AI experience seamless, but that may not be the best thing from a privacy standpoint.

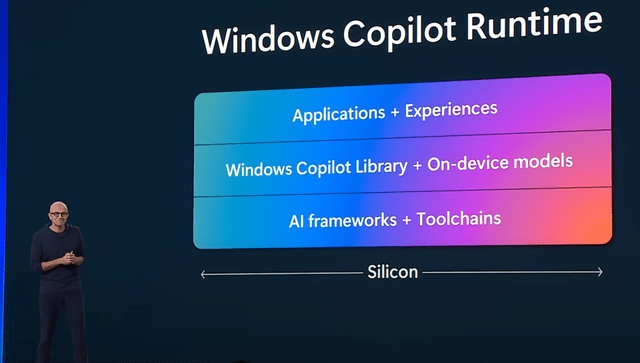

Powering the on-device AI is a new Windows Copilot Runtime:

Microsoft has more than 40 models that can run locally for different types of tasks. At the Build keynote, Nadella made an interesting statement:

“What Win32 was to the graphical user interface, Copilot Runtime will be to AI.”

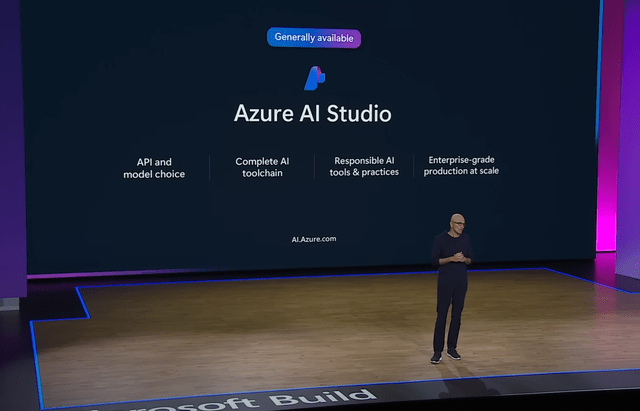

So clearly, Nadella expects that AI will be the new frontier in computer user interfaces. Microsoft is also moving quickly to provide developers with the tools to create cloud-based AIs:

This combination of on-device and cloud-based AI, both accessible to developers, is enormously powerful. It allows developers to create applications that can partake of both cloud and local computing as needed to implement the AI service or application.

And if this isn’t enough of an attraction for developers, there’s GitHub Copilot. GitHub Copilot goes well beyond mere code completion. With this Copilot, the aspiring developer can simply write a plain English description of what the code should do, and the Copilot will generate the code from scratch.

And Microsoft is endowing Copilots with the ability to become agents, presumably on behalf of the user. This means that Copilots can perform actions rather than simply find or summarize data on the computer.

Apple’s “AI for the rest of us”

The WWDC keynote introduced Apple’s approach to AI, called Apple Intelligence, which mostly features on-device AI. This does amount to making a virtue of a necessity, but it takes advantage of Apple’s greater experience with its own Neural Engine. As mentioned above, Neural Engines have been a feature of every Apple chip since the A11 Bionic.

It also takes advantage of the shared memory architecture of the M series chips for Apple Silicon Macs. Since the GPU has the potential to access a memory space as large as 128 GB (on the M3 Max), the GPU becomes useful as a generative AI accelerator along with the Neural Engine.

And Apple hasn’t tried to leverage the new features of Apple Intelligence to compel hardware upgrades. Apple Intelligence will be available for all Macs with M series processors, all iPads with M series processors, the Vision Pro, and the iPhone 15 Pro with the A17 Pro processor.

What you get with Apple Intelligence (I’ll just call it AI) is much the same as the Copilot+ PC:

The writing tools appear to be very comprehensive and can be used to generate or modify text in almost any application that calls for text entry, including Messages and Mail. But the image tool, called Image Playground, seems to be merely a toy compared to what’s already available on the web.

Once again, this seems to be a limitation due to having to run on the local device. Apple does offer some AI-based photo editing and video editing tools within its Photos app.

Apple doesn’t seem to offer anything equivalent to Recall, although it sounded as though Apple was trying eventually to endow Siri with that capability. Siri will now be generative AI based and be able to converse more naturally with the user, as well as show awareness of the user’s screen and other user data. Siri seems intended as a universal Copilot, but, no surprise, many features aren’t ready.

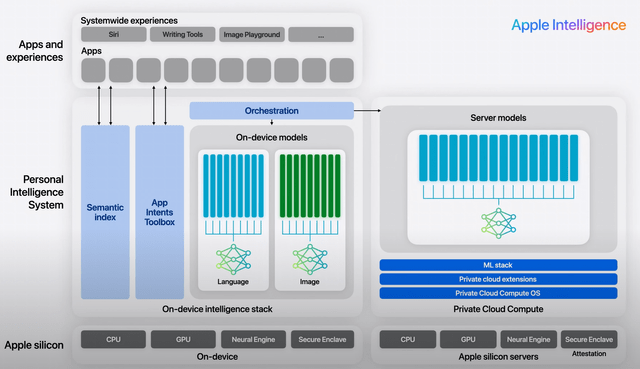

As much as Apple emphasized on-device AI for privacy and security, it had to bow to the technical imperatives of generative AI. When on-device AI isn’t enough, Apple will move the task to its own servers built on Apple Silicon. When the AI workload shifts to the Apple Private Cloud, Apple minimizes the transfer of user data, which is end-to-end encrypted, and no data is saved after the task is completed.

The fact that Apple even has servers based on its own Apple Silicon was something of a revelation, although it’s been speculated for some time. Given the efficiency of Apple Silicon, it only makes sense that Apple would use it for its cloud-based AI offering.

However, Apple doesn’t afford developers the opportunity to develop for its Private Cloud. While this is somewhat limiting, it does protect the integrity of the Private Cloud.

Even Apple’s Private Cloud can’t implement a ChatGPT, so Apple provides access to OpenAI’s GPT-4o via Siri. Siri always asks before accessing GPT-4o, and no OpenAI user account is needed for it. However, Apple can’t be responsible for what gets sent to the AI.

Apple developers needn’t envy GitHub Copilot. The next version of Xcode will fully integrate AI writing tools. These include code completion and even the generation of large blocks of code based on a plain English description.

Investor takeaways: No clear winner in AI-enhanced operating systems, yet

Despite the lack of a full-fledged GPT, Apple has pulled roughly even with Microsoft in the competition for the AI computer interface of the future. What Apple lacks in a GPT, it makes up for with Private Cloud Compute, and the superior on-device AI performance of Apple Silicon.

I know that there have been a lot of claims made on behalf of the Snapdragon X Elite, that it was faster than M3. But M3 is just the base model of the third generation M series, and X Elite can’t compete with M3 Pro or Max. Such inconvenient comparisons never seem to get made.

And the M4 series promises to be enormously powerful, based on the performance of the new iPad Pros. So it comes down to Microsoft, superior in the cloud, versus Apple, superior on the device.

In terms of market impact, I give the edge to Apple. Microsoft now has to build a new constituency for Copilot+ PC devices, whereas Apple will be able to retrofit Apple Intelligence to a wide array of devices. And going forward, all Apple devices (iPhone, iPad, Vision Pro, and Mac) will support Apple Intelligence with a very consistent user experience.

We may be on the verge of a new PC renaissance, in which the PC ceases to be merely a useful device and becomes a useful companion. I remain long Apple and Microsoft and rate them Buys.
