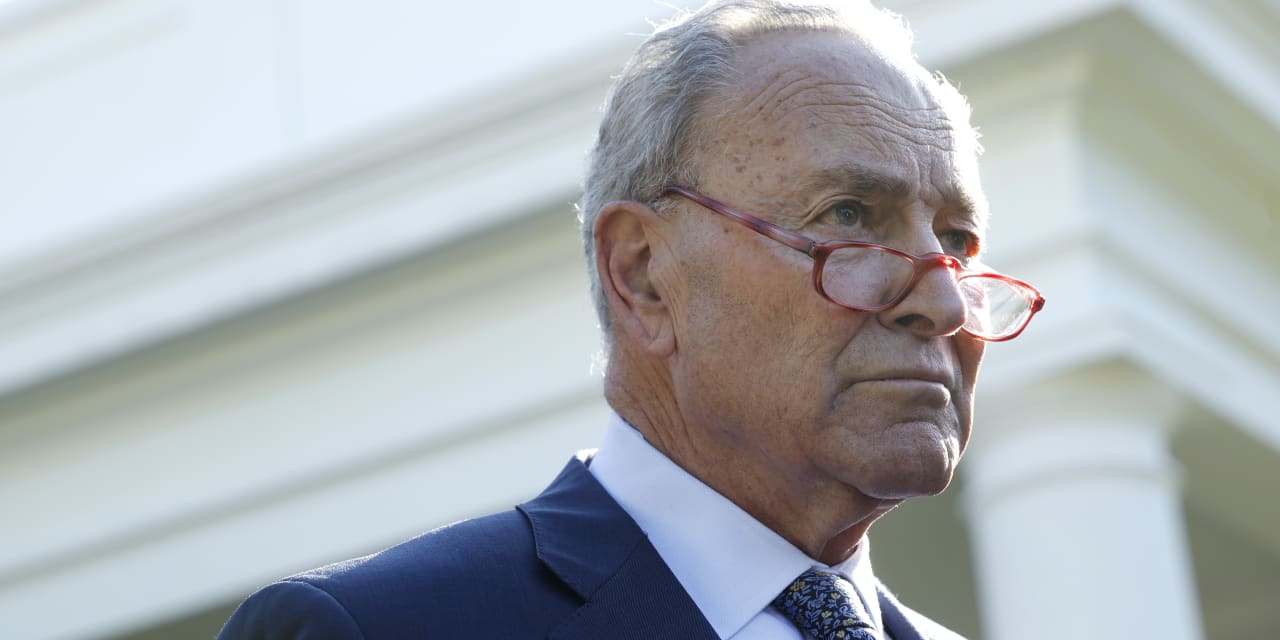

Tech CEOs including Elon Musk and Mark Zuckerberg headed to Capitol Hill on Wednesday to participate in Senate Majority Leader Chuck Schumer’s first AI Insight Forum, giving lawmakers a chance to hear from the industry itself.

Lawmakers are trying to produce rules and guardrails around artificial intelligence, a booming technology that has raised questions about its potential to replace human workers and cause harm through misuse.

Schumer (D., N.Y.) said the inaugural forum would be a candid debate about how Congress can tackle the opportunities and challenges of AI, and that the discussion will inform lawmakers as they draft legislation.

Any candor will be of limited use to the public, though. The event is taking place behind closed doors.

Almost since the launch of OpenAI’s ChatGPT chatbot late last year, Congress, academics, and tech companies have been struggling with the question of how and whether to regulate generative artificial intelligence and large language models.

“AI is here to stay,” Schumer said in his opening remarks. “Congress must play a role, because without Congress we will neither maximize AI’s benefits nor minimize its risks. We know this won’t be easy.”

CEOs who attended said they recognize there is a consensus that there should be AI regulation. Musk told CNBC as he left Capitol Hill that the consequences of AI going wrong are severe and that the industry and lawmakers need to be proactive. But he also said it will be a process.

“The sequence of events will not be jumping into the deep end and making rules,” Musk said.

Schumer called for action on AI that prioritizes innovations for everything from curing disease to making businesses more efficient to protecting security, but also prevents AI from going off track.

The 22 confirmed attendees for Wednesday’s event made up a who’s who in the booming AI sector, including Sam Altman, the CEO of OpenAI, which shook up the industry after releasing its ChatGPT generative AI chatbot.

Other confirmed attendees included Microsoft (ticker: MSFT) CEO Satya Nadella, who has led the software maker’s billions of dollars of investments in OpenAI, Nvidia (NVDA) CEO Jensen Huang, Alphabet (GOOGL) CEO Sundar Pichai, Tesla’s (TSLA) Musk, Meta Platforms’ (META) Zuckerberg and Alex Karp from Palantir (PLTR). Labor leaders and academics are also on the attendee list.

Amazon.com (AMZN) CEO Andy Jassy and Amazon Web Services CEO Adam Selipsky were invited but had scheduling conflicts.

This wasn’t the first AI event this week on Capitol Hill. On Tuesday, a subcommittee of the Senate Judiciary Committee heard testimony from Nvidia’s chief scientist William Dally and Microsoft’s vice chair and president Brad Smith.

Subcommittee co-leaders Sen. Richard Blumenthal (D., Conn.) and Sen. Josh Hawley (R., Mo.) have issued a one-page legislative framework for regulating AI, including registration and licensing requirements with an independent oversight agency for sophisticated AI models and export controls to limit the transfer of technology.

While supportive of new efforts to ensure AI is developed ethically and responsibly, Nvidia’s Dally tried to calm fears associated with the unforeseen consequences of AI.

“Fortunately, uncontrollable artificial general intelligence is science fiction, not reality,” Dally said. “We humans will always decide how much decision-making power to cede to AI models. The AI models will never seize power by themselves.”

IBM (ticker: IBM) CEO Arvind Krishna, who is also attending the forum, offered a similar view in an interview with Barron’s earlier this year.

Krishna said that people are using nightmare scenarios to argue for strict regulation of AI. While he agrees on the need for guardrails about how and where AI models get used, he cautioned against aggressive regulation. “We don’t want to have regulation on innovation,” he says. “All you are going to do is give an advantage to those who choose to ignore the regulation and those who work outside the U.S. boundaries.”

Palantir’s Karp told investors last year that any pause in AI development would hurt national security. That “would be to indulge in the fantasy of a world without conflict,” he wrote in a shareholder letter. “The applications of these newest forms of artificial intelligence have been and will continue to be determinative on the battlefield. Others can debate the merits of proceeding with the development of these technologies. But we will not stand still while our adversaries move ahead.”

Separately, a subcommittee of the Senate Commerce and Science Committee held a hearing on how AI companies can be more transparent and improve public trust.

Also on Tuesday, the Biden Administration secured commitments from eight more big-name companies to make artificial intelligence safe.

Adobe (ticker: ADBE), Nvidia, Palantir, Salesforce (CRM), and IBM joined others who have pledged “to help advance the development of safe, secure and trustworthy AI,” the White House said.

Cohere, Scale AI, and Stability also made the commitment. Seven others, including Amazon, Meta, and Microsoft, agreed to the guidelines in July.

Those who joined the initiative agreed to ensure products are safe before making them public, put security first, and earn the public’s trust. They also agreed to share information on the potential dangers from AI and develop ways to let people know when content is AI-generated.

Write to Janet H. Cho at [email protected]
